Welcome to our first ever progress report! Development of 6.0 is going to take a long time and bring a lot of changes. Because of that, many users, as well as some members of the team, have suggested we publish monthly progress reports, so here is the first one.

.NET 6

The previous version used .NET Core 3.1, a Long Term Support release. Microsoft is expected to launch the next Long Term Support version of .NET on the 9th of November.

With .NET 6 we get improvements aimed purely at developers, such as file-scoped namespaces, global usings and many others.

But we also get big speed improvements, up to twice as fast in some parts of the application.

We also get proper native support for the Apple M1 architecture, instead of having to run under Rosetta emulation.

Spectres, but no console ghosts

One of the first changes we made was to rework the console output using the Spectre.Console library.

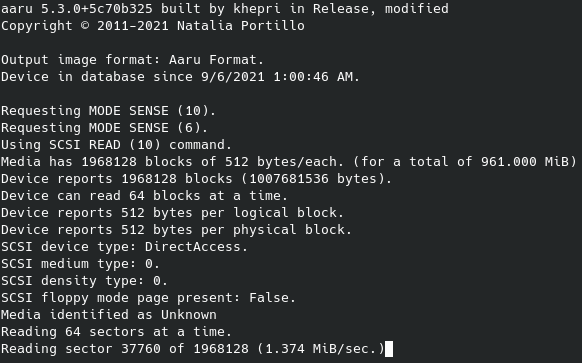

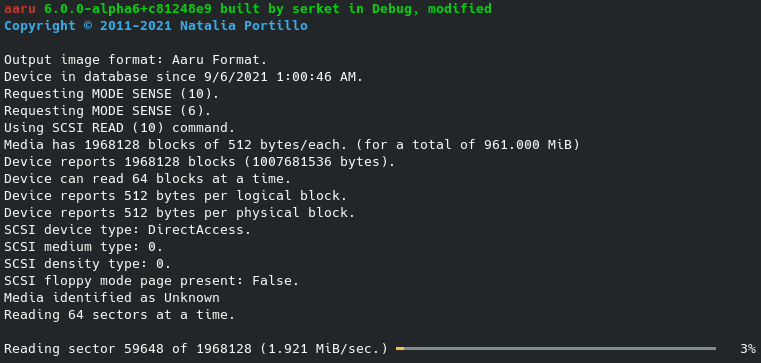

The most noticeable change is the progress bars. For example, the dumping progress, which looked like this:

now looks much better, and it is easier to see how far along the process is:

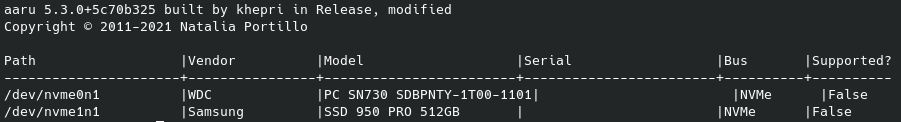

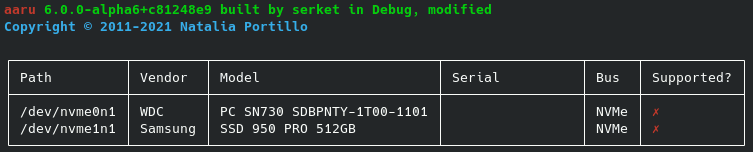

We also get tables. This is how the device list looked before:

and now it is much clearer, with no overlapping text:

This new console system opens up many possibilities, such as colors, italics and other styles, which will be implemented as needed.

Of checking integrity and equality

This work took up the first half of the month. Aaru supports three types of integrity checking: checksums, hashing, and fuzzy hashing.

Checksums

A checksum is a mathematical operation over the data, starting from a known constant. The operation is usually a sum or an exclusive or (XOR, a kind of binary sum without carry). The result is a small value that is very likely to change if any part of the data changes, so comparing it against a stored value reveals corruption.
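To make this concrete, here is a minimal C sketch of 16-bit Fletcher, one of the checksums Aaru supports. It is written in the simplest one-byte-at-a-time style. It is an illustration of the technique, not Aaru's actual code. It keeps two running sums modulo 255. The second sum accumulates the first, so reordering bytes also changes the result.

```c
#include <stddef.h>
#include <stdint.h>

/* Fletcher-16: two running sums modulo 255, one byte at a time. */
uint16_t fletcher16(const uint8_t *data, size_t len)
{
    uint16_t sum1 = 0; /* plain sum of the bytes, modulo 255 */
    uint16_t sum2 = 0; /* sum of the running sums, makes the result order-sensitive */

    for (size_t i = 0; i < len; i++) {
        sum1 = (uint16_t)((sum1 + data[i]) % 255);
        sum2 = (uint16_t)((sum2 + sum1) % 255);
    }
    /* High byte holds sum2, low byte holds sum1. */
    return (uint16_t)((sum2 << 8) | sum1);
}
```

For example, `fletcher16((const uint8_t *)"abcde", 5)` gives `0xC8F0`. Swapping two bytes or changing one of them gives a different value.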

Checksums are used extensively: in compressed archives, in the sectors of the media themselves (optical discs store one in each sector, called EDC, and floppies do as well), and in media images to verify their integrity.

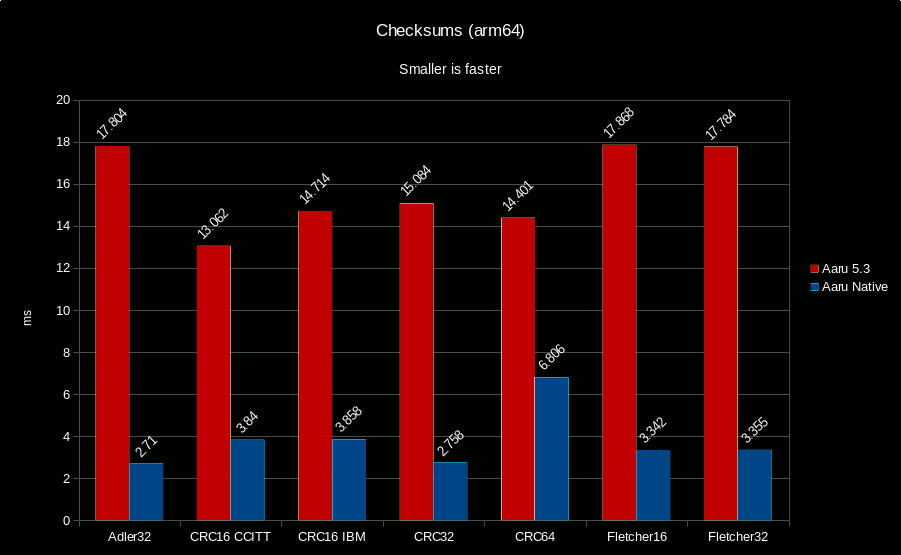

Aaru supports six major checksums: Adler-32, 16-bit Fletcher, 32-bit Fletcher, 16-bit CRC (CCITT and IBM variants), 32-bit CRC (ISO variant) and 64-bit CRC (ECMA variant).
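As an example of the CRC family, here is a bit-by-bit sketch of a 64-bit CRC built on the ECMA-182 polynomial. It assumes the common reflected parameters known as CRC-64/XZ: initial value and final XOR of all ones. The exact parameters Aaru uses are not stated in this report. This is the slowest way to compute a CRC, shown only to make the definition clear.

```c
#include <stddef.h>
#include <stdint.h>

/* Reflected form of the ECMA-182 polynomial 0x42F0E1EBA9EA3693. */
#define CRC64_ECMA_POLY_REFLECTED 0xC96C5795D7870F42ULL

/* Bit-by-bit CRC-64, assuming XZ-style parameters (init and final XOR all ones). */
uint64_t crc64_ecma(const uint8_t *data, size_t len)
{
    uint64_t crc = 0xFFFFFFFFFFFFFFFFULL;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        /* Process the 8 bits of this byte, least significant first. */
        for (int bit = 0; bit < 8; bit++)
            crc = (crc & 1) ? (crc >> 1) ^ CRC64_ECMA_POLY_REFLECTED : crc >> 1;
    }
    return ~crc;
}
```

With these parameters the standard check string `"123456789"` gives `0x995DC9BBDF1939FA`.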

Up to version 5.3, all of these checksums used the most basic implementation, processing one byte at a time.
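As a reference point, this is what a classic byte-at-a-time implementation looks like. The example is a minimal C sketch of the 32-bit ISO CRC (reflected polynomial 0xEDB88320). It does one table lookup per input byte. It is a generic textbook version, not a copy of Aaru's pre-6.0 code, and the lazy table initialization is not thread-safe.

```c
#include <stddef.h>
#include <stdint.h>

static uint32_t crc32_table[256];

/* Precompute the CRC of every possible byte value. */
static void crc32_init_table(void)
{
    for (uint32_t n = 0; n < 256; n++) {
        uint32_t c = n;
        for (int k = 0; k < 8; k++)
            c = (c & 1) ? (c >> 1) ^ 0xEDB88320u : c >> 1;
        crc32_table[n] = c;
    }
}

/* Byte-at-a-time CRC-32: each input byte costs one table lookup and a shift. */
uint32_t crc32_bytewise(const uint8_t *data, size_t len)
{
    static int table_ready = 0; /* not thread-safe; fine for a sketch */
    if (!table_ready) {
        crc32_init_table();
        table_ready = 1;
    }

    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++)
        crc = (crc >> 8) ^ crc32_table[(crc ^ data[i]) & 0xFF];
    return ~crc;
}
```

The loop carries a dependency from one byte to the next, which is why this form cannot take advantage of modern processors.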

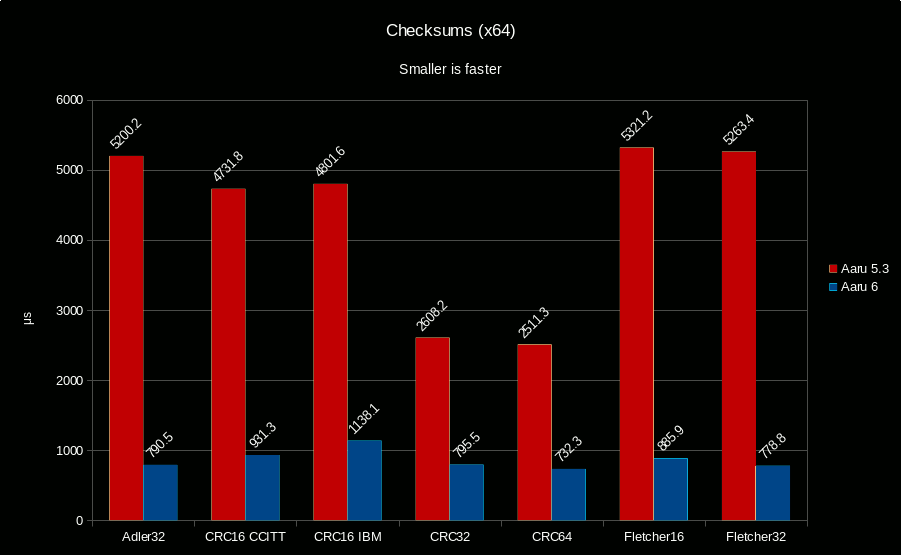

However, due to their mathematical nature, these checksums can be parallelized and computed a block at a time. Combine that with the ability of modern processors to perform the same operation on several pieces of data at once (SIMD, Single Instruction Multiple Data), and we get a massive speed-up:
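One simple block-level trick, borrowed here from zlib's well-known Adler-32 code, is to defer the expensive modulo. The naive loop reduces both sums after every byte. The blocked loop adds up to 5552 bytes first, the largest count that cannot overflow 32 bits, and reduces once per block. This minimal sketch shows only that step. SIMD versions go further by vectorizing the inner loop, and Aaru's actual code may differ.

```c
#include <stddef.h>
#include <stdint.h>

#define ADLER_MOD 65521u /* largest prime below 2^16 */
#define ADLER_NMAX 5552  /* max bytes before the 32-bit sums could overflow (zlib's bound) */

/* Naive version: two modulo operations per byte. */
uint32_t adler32_naive(const uint8_t *data, size_t len)
{
    uint32_t a = 1, b = 0;
    for (size_t i = 0; i < len; i++) {
        a = (a + data[i]) % ADLER_MOD;
        b = (b + a) % ADLER_MOD;
    }
    return (b << 16) | a;
}

/* Blocked version: plain additions inside a block, one reduction per block. */
uint32_t adler32_blocked(const uint8_t *data, size_t len)
{
    uint32_t a = 1, b = 0;
    while (len > 0) {
        size_t block = len < ADLER_NMAX ? len : ADLER_NMAX;
        len -= block;
        while (block--) {
            a += *data++;
            b += a;
        }
        a %= ADLER_MOD;
        b %= ADLER_MOD;
    }
    return (b << 16) | a;
}
```

Both functions return the same value, for example `0x11E60398` for `"Wikipedia"`. The blocked inner loop is also the part a compiler or hand-written SIMD code can unroll and vectorize.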

On less powerful processors, like the Raspberry Pi's, the difference is significantly larger:

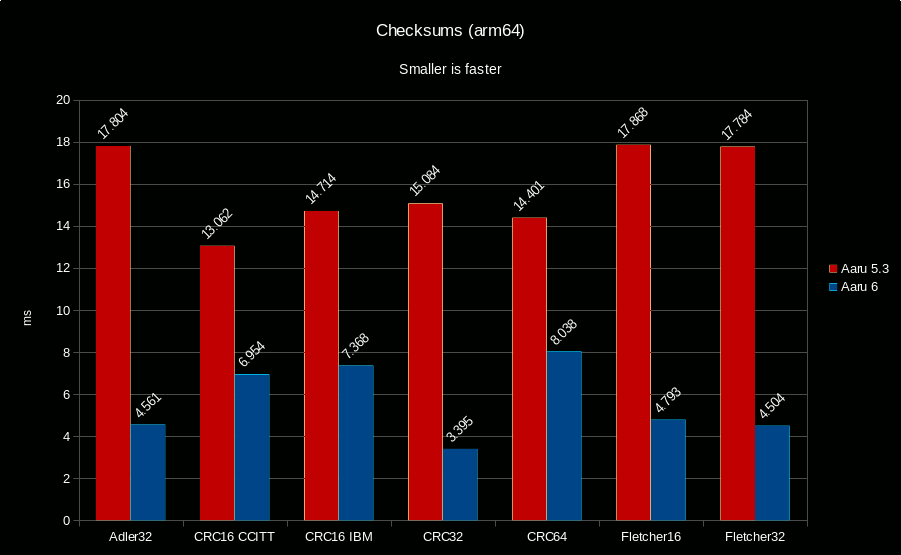

And if you have a 64-bit version of Linux installed on your Pi, the difference is larger still:

However, one of the advantages of writing Aaru in C# can also become a disadvantage. C# is not translated into the native machine code a processor understands until the program runs, and that translation is sometimes not as efficient as code written in a language like C. Also, the translator (called the JIT, short for just-in-time compiler) is really only heavily optimized for the most common architecture: Intel/AMD.

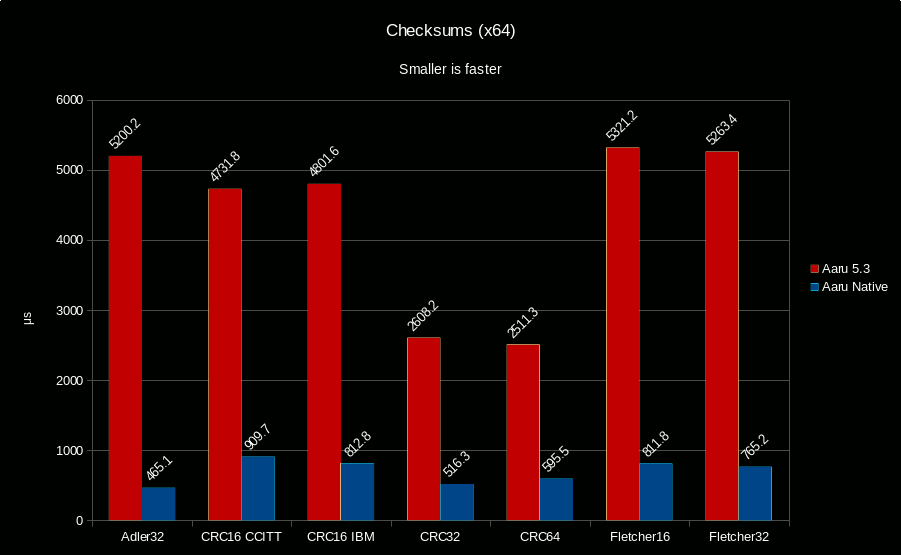

That's why we also added a sub-library specifically for checksums: Aaru.Checksums.Native. It is written in C and compiled with the highest optimizations available. On the Intel/AMD architecture it gives a nice speed-up even over the new C# algorithms shown above:
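The report does not describe the interface of Aaru.Checksums.Native. As an assumption, here is one common way to shape a C checksum library so that C# can call it through P/Invoke (`[DllImport]`): an opaque context plus init, update and final functions. The functions use only plain pointers, integers and status codes. All names below are hypothetical, and Adler-32 stands in for any of the checksums.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical context; the managed side only ever holds a pointer to it. */
typedef struct {
    uint32_t a;
    uint32_t b;
} adler32_ctx;

/* Allocate and initialize a context; returns NULL on allocation failure. */
adler32_ctx *adler32_init(void)
{
    adler32_ctx *ctx = malloc(sizeof *ctx);
    if (ctx) {
        ctx->a = 1;
        ctx->b = 0;
    }
    return ctx;
}

/* Feed another chunk of data; may be called any number of times. Returns 0 on success. */
int adler32_update(adler32_ctx *ctx, const uint8_t *data, uint32_t len)
{
    if (!ctx || (!data && len))
        return -1;
    uint32_t a = ctx->a, b = ctx->b;
    while (len > 0) {
        uint32_t block = len < 5552 ? len : 5552; /* deferred modulo, as in zlib */
        len -= block;
        while (block--) {
            a += *data++;
            b += a;
        }
        a %= 65521u;
        b %= 65521u;
    }
    ctx->a = a;
    ctx->b = b;
    return 0;
}

/* Write the final value and release the context. Returns 0 on success. */
int adler32_final(adler32_ctx *ctx, uint32_t *out)
{
    if (!ctx || !out)
        return -1;
    *out = (ctx->b << 16) | ctx->a;
    free(ctx);
    return 0;
}
```

Keeping the interface to plain C types avoids marshalling costs and ownership questions on the managed side. Feeding `"Wiki"` and then `"pedia"` gives the same result as hashing `"Wikipedia"` in one call.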

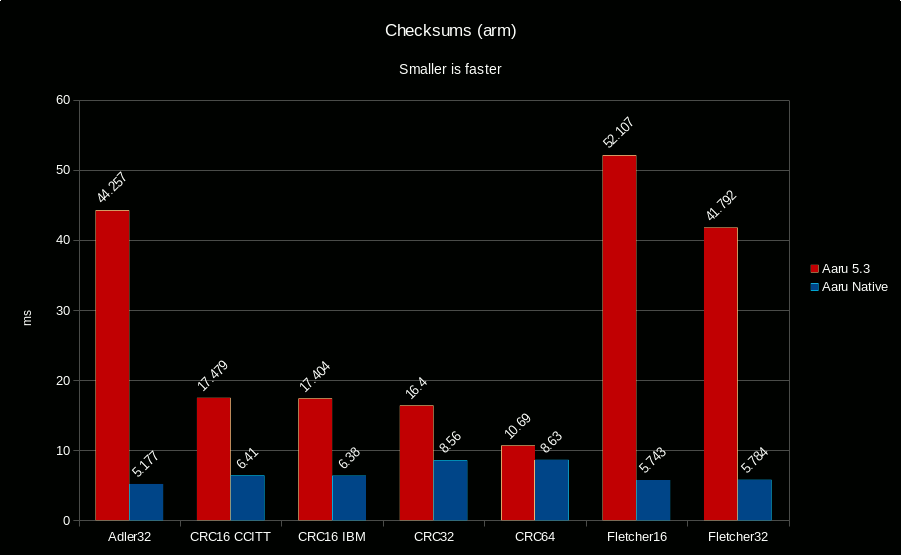

But on an architecture like the Raspberry Pi's, where the JIT is much less optimized, the difference is dramatically larger:

And on the 64-bit Raspberry Pi, where the JIT is even less optimized, the native library gives us a huge speed-up:

Hashing

Hashing is a mathematical operation that takes an input and produces an output that, in practice, could only have come from that input: finding two different inputs that produce the same output is meant to be computationally infeasible.

This marks a big difference from checksums. Checksums are designed to detect accidental errors in relatively small amounts of data. Hashes are designed to guarantee authenticity and to be, at least for a time, impossible to forge (that is, nobody should be able to find a collision, two different inputs with the same output).
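A small demonstration of why a checksum is no substitute for a hash: CRC-32 is affine over XOR. For any three buffers of the same length, CRC(a XOR b XOR c) equals CRC(a) XOR CRC(b) XOR CRC(c). So anyone can predict, and compensate for, the effect of a change on the CRC without knowing any secret. That is fine for catching accidental errors but useless against deliberate forgery. This is a generic C sketch, and the 64-byte limit is only there to keep it short.

```c
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32 (ISO variant, reflected polynomial 0xEDB88320). */
uint32_t crc32_bitwise(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int k = 0; k < 8; k++)
            crc = (crc & 1) ? (crc >> 1) ^ 0xEDB88320u : crc >> 1;
    }
    return ~crc;
}

/* Returns 1 if CRC(a ^ b ^ c) == CRC(a) ^ CRC(b) ^ CRC(c) for these buffers.
   Buffers must have the same length, at most 64 bytes in this sketch. */
int crc32_xor_identity_holds(const uint8_t *a, const uint8_t *b, const uint8_t *c, size_t len)
{
    uint8_t x[64];
    if (len > sizeof x)
        return 0;
    for (size_t i = 0; i < len; i++)
        x[i] = (uint8_t)(a[i] ^ b[i] ^ c[i]);
    return crc32_bitwise(x, len) ==
           (crc32_bitwise(a, len) ^ crc32_bitwise(b, len) ^ crc32_bitwise(c, len));
}
```

The identity holds for any such triple. The initial value and final XOR cancel out because there is an odd number of terms. A cryptographic hash is specifically designed to have no structure like this.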

We looked for ways to optimize the current approach, which calls the .NET implementations of the hashes. We found that, at least on Linux, where these benchmarks were run, they simply call the famous OpenSSL library. OpenSSL is already heavily optimized for all architectures, and since we are already using it, there was nothing to gain from replacing it.

Fuzzy hashing

This is the elephant in the room. It is a feature that has been in Aaru for a long time and is enabled by default, yet nobody seems to know what it is.

So now it is time to explain what it is.

Fuzzy hashing is a way of processing data that produces more than an output identifying the input. When that output is compared with another fuzzy hash, it tells us how similar the two inputs are.

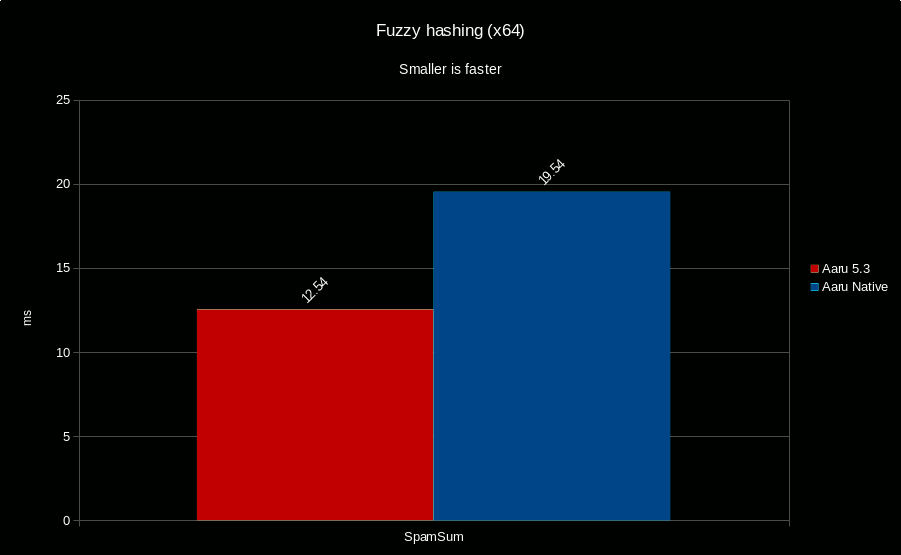

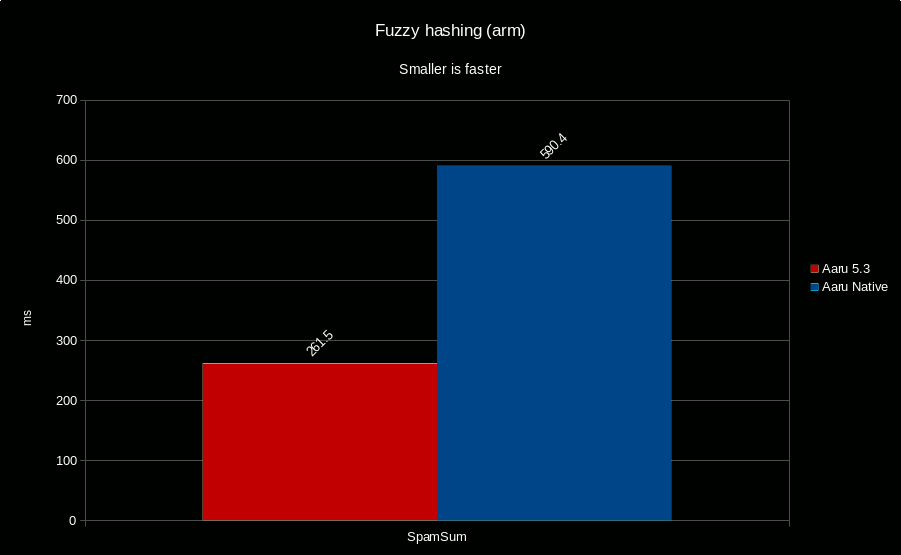

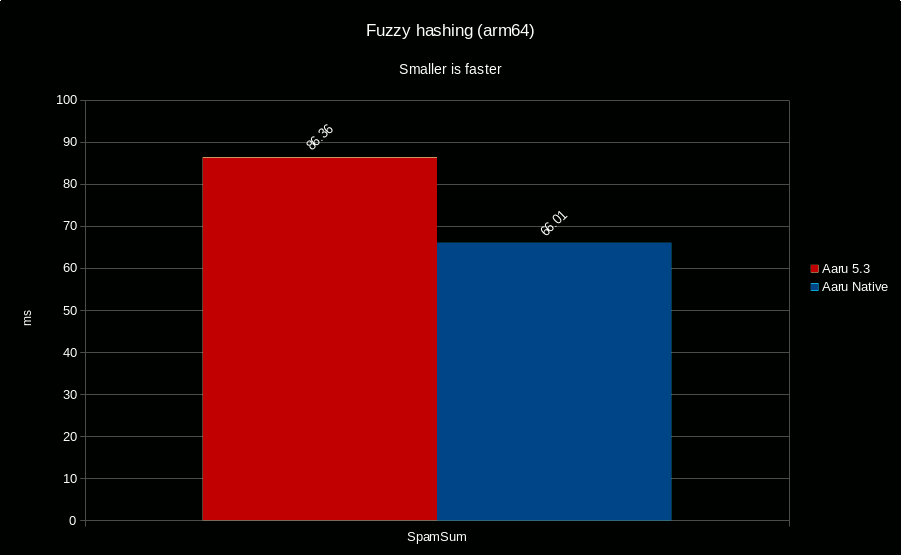

We use SpamSum for this, and as the name suggests, it is widely used for spam detection. We added and enabled it because it can also tell us whether two media images are similar even when they are not identical: for example, a copy infected with a virus, or one whose slack space has been cleaned.
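SpamSum works by context triggered piecewise hashing. A rolling hash over the last 7 bytes decides where to cut the data into chunks. Each chunk is then hashed and reduced to one character of the signature. Because a cut point depends only on the few bytes around it, inserting or changing data disturbs only the nearby chunks, and most of the signature stays the same. That is what makes two similar images produce similar signatures. The sketch below is modelled on the rolling hash of the public ssdeep implementation. It only finds the cut points; block size selection, chunk hashing and signature comparison are omitted. `ctph_boundaries` is a hypothetical helper, not part of Aaru or ssdeep.

```c
#include <stddef.h>
#include <stdint.h>

#define ROLLING_WINDOW 7

/* Rolling hash state in the style of ssdeep's SpamSum implementation. */
typedef struct {
    uint8_t window[ROLLING_WINDOW];
    uint32_t h1, h2, h3;
    uint32_t n;
} roll_state;

/* Push one byte in; the result depends only on the last 7 bytes. */
static void roll_hash(roll_state *rs, uint8_t c)
{
    rs->h2 -= rs->h1;                         /* age the weighted sum */
    rs->h2 += ROLLING_WINDOW * (uint32_t)c;
    rs->h1 += c;                              /* plain sum of the window */
    rs->h1 -= rs->window[rs->n % ROLLING_WINDOW];
    rs->window[rs->n % ROLLING_WINDOW] = c;
    rs->n++;
    rs->h3 = (rs->h3 << 5) ^ c;               /* shift-xor; old bytes fall off the top */
}

/* Hypothetical helper: record offsets where a chunk ends (block_size must be > 0). */
size_t ctph_boundaries(const uint8_t *data, size_t len, uint32_t block_size,
                       size_t *out, size_t max_out)
{
    roll_state rs = {{0}, 0, 0, 0, 0};
    size_t count = 0;
    for (size_t i = 0; i < len; i++) {
        roll_hash(&rs, data[i]);
        uint32_t sum = rs.h1 + rs.h2 + rs.h3;
        if (sum % block_size == block_size - 1 && count < max_out)
            out[count++] = i;
    }
    return count;
}
```

Prepending a few bytes to a buffer shifts its later cut points by exactly that amount instead of changing them. A plain hash, by contrast, changes completely with any edit.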

We tried to optimize the algorithm, to no avail: we did not find any way to make it faster. And when we tried a native version, we found something interesting:

The JIT did a better job than the native version!

The same happens on the 32-bit Raspberry Pi:

However, on architectures where the JIT is less optimized, like the 64-bit Raspberry Pi:

native has the advantage.

So we will detect the architecture and use the native version where it is faster. But as this kind of hashing is mostly ignored, we will also disable it by default.
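The selection rule that follows from the SpamSum benchmarks can be written down in a few lines. Here is a hypothetical C sketch: the enum and function names are made up for illustration. It detects the architecture at compile time with the usual GCC/Clang/MSVC predefined macros. A .NET application would more likely check `RuntimeInformation.ProcessArchitecture` at run time instead. 32-bit Intel was not benchmarked, so the sketch conservatively keeps the managed version there.

```c
#include <stdbool.h>

/* Hypothetical architecture identifiers, for illustration only. */
typedef enum { ARCH_X86, ARCH_X86_64, ARCH_ARM32, ARCH_ARM64, ARCH_OTHER } cpu_arch;

/* Compile-time detection using common predefined compiler macros. */
cpu_arch current_arch(void)
{
#if defined(__x86_64__) || defined(_M_X64)
    return ARCH_X86_64;
#elif defined(__i386__) || defined(_M_IX86)
    return ARCH_X86;
#elif defined(__aarch64__) || defined(_M_ARM64)
    return ARCH_ARM64;
#elif defined(__arm__) || defined(_M_ARM)
    return ARCH_ARM32;
#else
    return ARCH_OTHER;
#endif
}

/* Per the SpamSum results: the JIT won on Intel/AMD and on 32-bit ARM,
   the native build won on 64-bit ARM. Everything else stays managed. */
bool use_native_spamsum(cpu_arch arch)
{
    return arch == ARCH_ARM64;
}
```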

And if you have been paying attention, you will have noticed that it is by far the slowest of all the algorithms. Simply disabling it unless you need it will give a huge speed-up for most users.

Compression

Compression is the process of transforming data into a smaller representation that can be expanded back into the original without any difference.
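The simplest illustration of this "expand back without any difference" property is run-length encoding, sketched below in C. Each run of identical bytes is stored as a (count, byte) pair. It is only a teaching example. The algorithms Aaru actually uses, such as LZMA, are far more sophisticated and compress far better.

```c
#include <stddef.h>
#include <stdint.h>

/* Encode runs as (count, byte) pairs, count 1..255.
   Returns the encoded length, or 0 if the output buffer is too small. */
size_t rle_encode(const uint8_t *in, size_t len, uint8_t *out, size_t cap)
{
    size_t o = 0;
    for (size_t i = 0; i < len;) {
        uint8_t value = in[i];
        size_t run = 1;
        while (i + run < len && in[i + run] == value && run < 255)
            run++;
        if (o + 2 > cap)
            return 0;
        out[o++] = (uint8_t)run;
        out[o++] = value;
        i += run;
    }
    return o;
}

/* Expand (count, byte) pairs back to the original bytes.
   Returns the decoded length, or 0 on malformed input or a too-small buffer. */
size_t rle_decode(const uint8_t *in, size_t len, uint8_t *out, size_t cap)
{
    size_t o = 0;
    if (len % 2 != 0)
        return 0;
    for (size_t i = 0; i < len; i += 2) {
        size_t run = in[i];
        if (run == 0 || o + run > cap)
            return 0;
        for (size_t k = 0; k < run; k++)
            out[o++] = in[i + 1];
    }
    return o;
}
```

A buffer of 100 zero bytes, then "ABC", then 57 bytes of 0xFF encodes to just 10 bytes and decodes back byte for byte. On data without runs, this scheme doubles the size, which is why real formats use smarter methods.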

In Aaru, compression is used in many places, mostly in disk image formats, some of which even support several different compression methods.

It is also one of the characteristic features of AaruFormat.

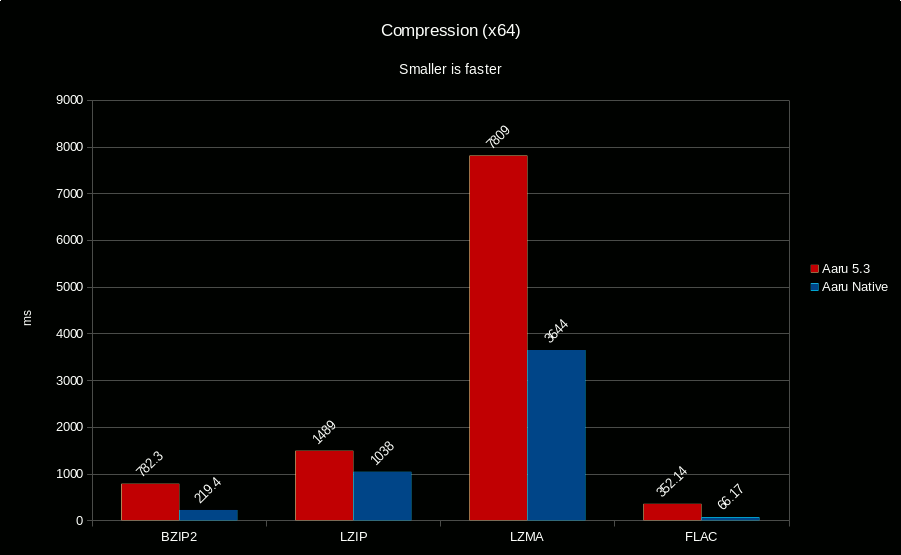

Here we went straight for the native library route. Compression code relies on low-level tricks that are not really replicable in C#, so all compression algorithms get a huge advantage from going native.

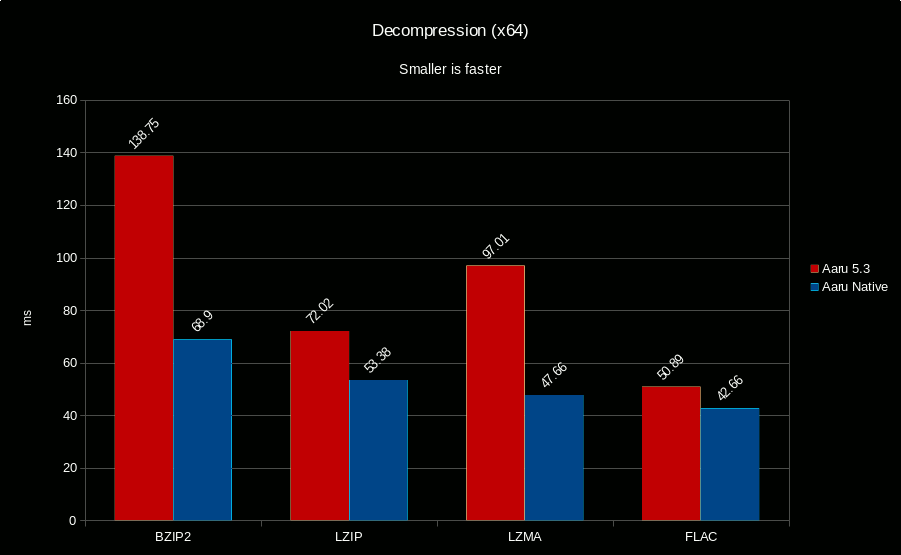

When decompressing we can see the following differences:

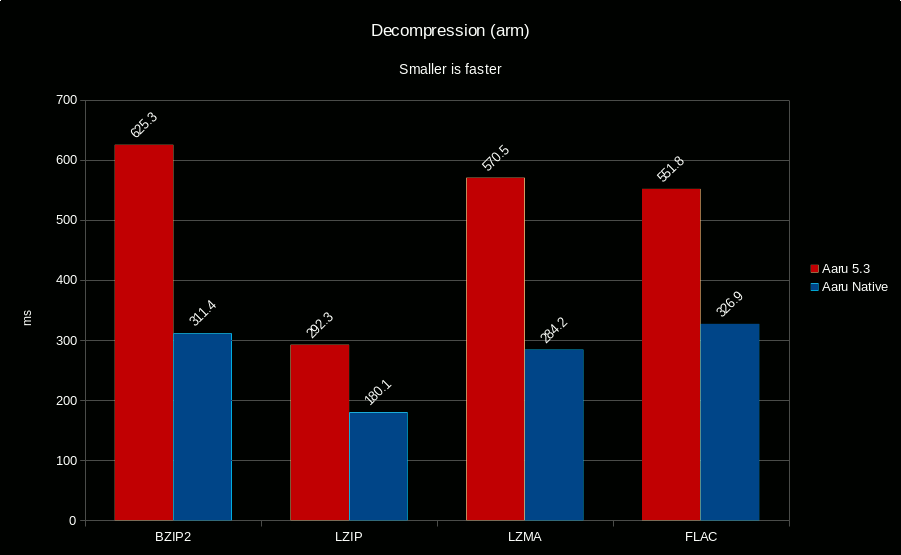

And as with the checksums, the differences get bigger on a low-powered Raspberry Pi:

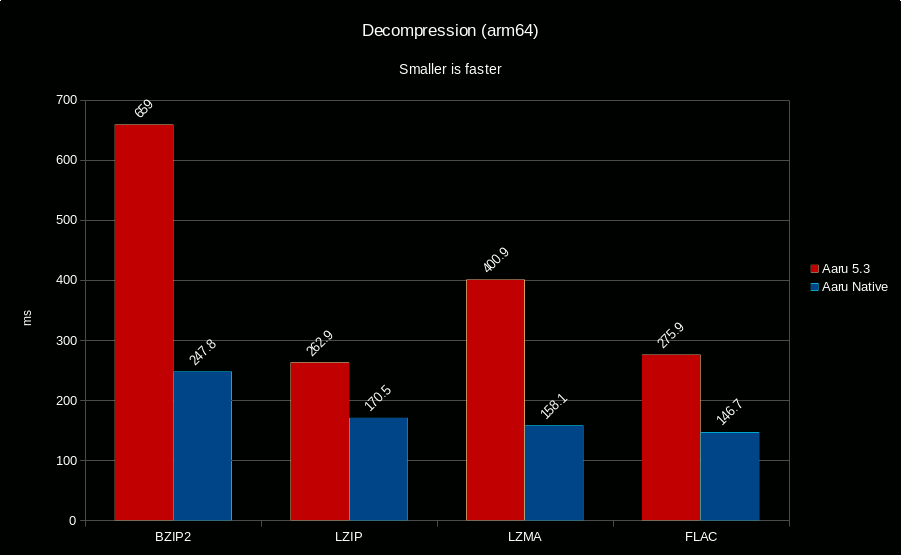

The same goes for the 64-bit Raspberry Pi:

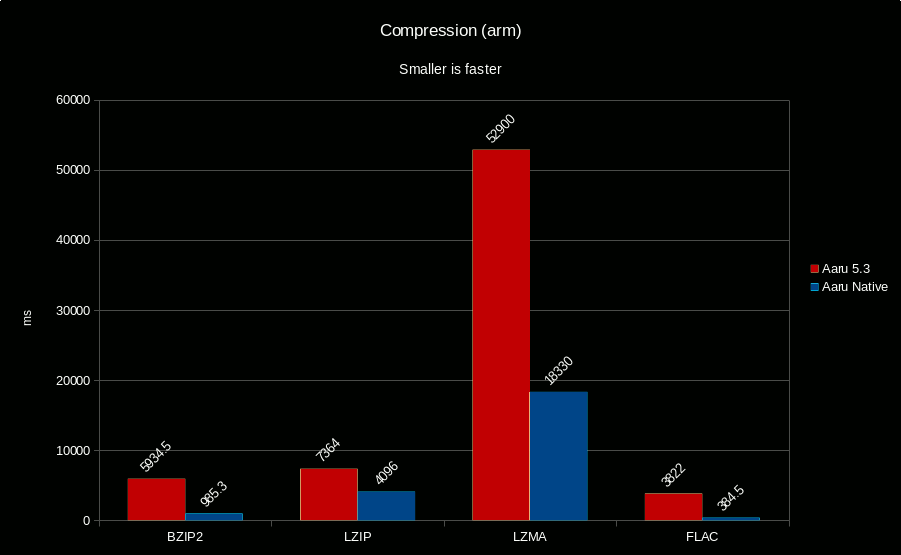

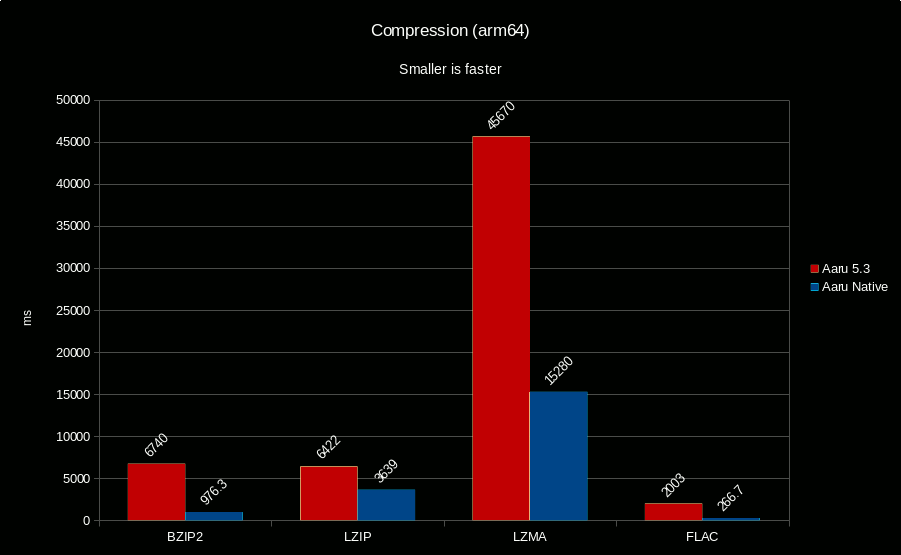

When compressing, especially with the very resource-intensive LZMA algorithm used by AaruFormat, the speed-up is very helpful:

And incredibly useful on the Raspberry Pi:

As well as on the 64-bit Raspberry Pi:

Conclusion

This month has been all about speed ups!

When Microsoft finally releases .NET 6, we will publish an update with the final graphs. These were made using a Release Candidate, and fixes in the final version may change the benchmark results.

The hardware used for benchmarking was:

- For Intel/AMD: Lenovo Legion 5, AMD Ryzen 7 5800H, Arch Linux (kernel 5.14.14-arch1)

- For Raspberry Pi: Raspberry Pi 4, Raspberry Pi OS (kernel 5.10.63-v8+)